False information about the new coronavirus has continued to spread around the world, just like the illness itself. In response, major technology companies have created new tools and rules to reduce misinformation and provide facts about the virus.

Health officials and others have welcomed the new efforts. They have long urged tech companies to do more to prevent the spread of false information online.

Andy Pattison is head of digital solutions for the World Health Organization (WHO). He told The Associated Press that some major tech companies have taken stronger action to reduce coronavirus misinformation.

For the past two years, Pattison has been urging companies like Facebook to take more aggressive action against false information about vaccinations. Now, he says his team spends a lot of time identifying misleading coronavirus information online. Sometimes, Pattison contacts officials at Facebook, Google and YouTube to request that they remove such misinformation.

A man opens the Facebook page on his computer to fact-check coronavirus disease (COVID-19) information in Abuja, Nigeria, March 19, 2020. (REUTERS/Afolabi Sotunde)

In some cases, coronavirus misinformation has led to deadly results. Last month, Iranian media reported that more than 300 people had died and 1,000 had been sickened after ingesting methanol, a poisonous alcohol. Information about the substance being a possible cure for coronavirus had recently appeared on social media.

In the American state of Arizona, a man died and his wife became seriously ill after taking chloroquine phosphate, a product that some people mistake for the anti-malaria drug chloroquine.

The U.S. Food and Drug Administration (FDA) says chloroquine phosphate is used to treat disease in fish kept at home. It is not meant to be taken by humans. Chloroquine has been used to treat malaria and some other conditions in humans. It is being studied as a possible treatment for COVID-19, the disease caused by the new coronavirus.

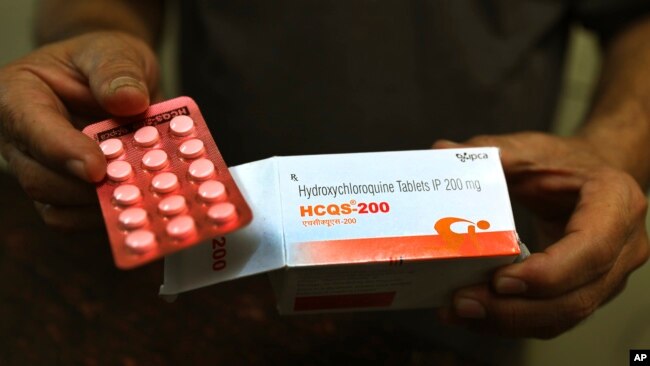

In this Thursday, April 9, 2020 file photo, a chemist displays hydroxychloroquine tablets in New Delhi, India. Chloroquine and the related drug hydroxychloroquine have been pushed by President Donald Trump after some early tests suggested the drugs might

U.S. President Donald Trump and some of his supporters have said they think chloroquine could be an effective treatment against the virus. Similar claims about chloroquine were widely publicized and shared on social media.

However, health officials have warned that the drug has not been proven to be safe or effective in treating or preventing COVID-19. Twitter and Facebook decided to take steps to reduce the spread of information about such unproven treatments.

Twitter removed a post by Trump’s personal lawyer Rudy Giuliani that described hydroxychloroquine, which is related to chloroquine, as “100 percent effective” against coronavirus. Twitter also removed a tweet from a Fox News broadcaster in which she said the drug had shown “promising results.”

And in what may have been a first, Facebook removed information posted by Brazilian President Jair Bolsonaro, who claimed hydroxychloroquine was “working in every place” to treat coronavirus. Twitter also removed a linked video.

Brazil's President Jair Bolsonaro is pictured with his protective face mask at a press statement during the coronavirus disease (COVID-19) outbreak in Brasilia, Brazil, March 20, 2020. (REUTERS/Ueslei Marcelino)

Facebook, Twitter, Google and others have increased their use of machine learning tools to identify false information. They also have put in place new restrictions on publishing misinformation.

Dipayan Ghosh is co-director of the Platform Accountability Project at the Harvard Kennedy School in Cambridge, Massachusetts. He told The Associated Press that technology companies have learned that the publication of misinformation about the coronavirus can have tragic results.

“They don’t want to be held responsible in any way for perpetuating rumors that could lead directly to death,” Ghosh said.

For example, the Facebook-owned private messaging service WhatsApp has put a limit on the number of people users can forward messages to. WhatsApp hopes this helps limit the spread of COVID-19 misinformation.

In this March 19, 2020, file photo, the Manhattan bridge is seen in the background of a flashing sign urging commuters to avoid gatherings, reduce crowding and to wash hands in the Brooklyn borough of New York. The coronavirus pandemic is leading to infor

Facebook also recently announced that it would start warning users if they have reacted to or shared false or harmful claims about COVID-19. The company says it will start sending such warning messages in the coming weeks. The users will also be directed to a website where the WHO lists and debunks misinformation about the coronavirus.

In addition to efforts to reduce false information, technology companies have noted they are widely publishing facts about the virus from trusted news sources and health officials. They are also making that information easy for users to find.

The WHO’s Andy Pattison praises those efforts, too; more correct information can help reduce the level of misinformation, he said.

“People will fill the void out of fear,” he added.

Words in This Story

perpetuate – v. to make something continue, especially something bad

rumor – n. information or a story that is passed from person to person but has not been proven true

debunk – v. to show evidence that something is not true

void – n. a large hole or empty space